I’ve been messing around with AI models for the past three years. Like everyone else, I was amazed by the original GitHub Copilot release, but what interested me a lot more was running small models like Mistral-7b on my local machine.

Combined with some of those early “agent” frameworks like LangChain, I played around with building my own local assistant. I strapped a Wolfram Alpha tool and a DuckDuckGo search tool onto this CLI chatbot so it could answer some of the world’s most burning questions like: “What is the current age of Justin Bieber multiplied by the current age of Oprah Winfrey?” and get a successful answer about 40% of the time. That was something ChatGPT at the time could not do, all on a model running on my machine! I felt pretty cool about that.

Looking back, my mental model about AI capabilities used to be that any LLM, even a small one, provided some quantifiable units of “intelligence”. And with enough tools, time, and delegation to sub-agents, even a model running locally could add up enough intelligence units to work towards any arbitrarily complex goal. We just needed to find the right harness. This honestly made me a bit nervous about my future career.

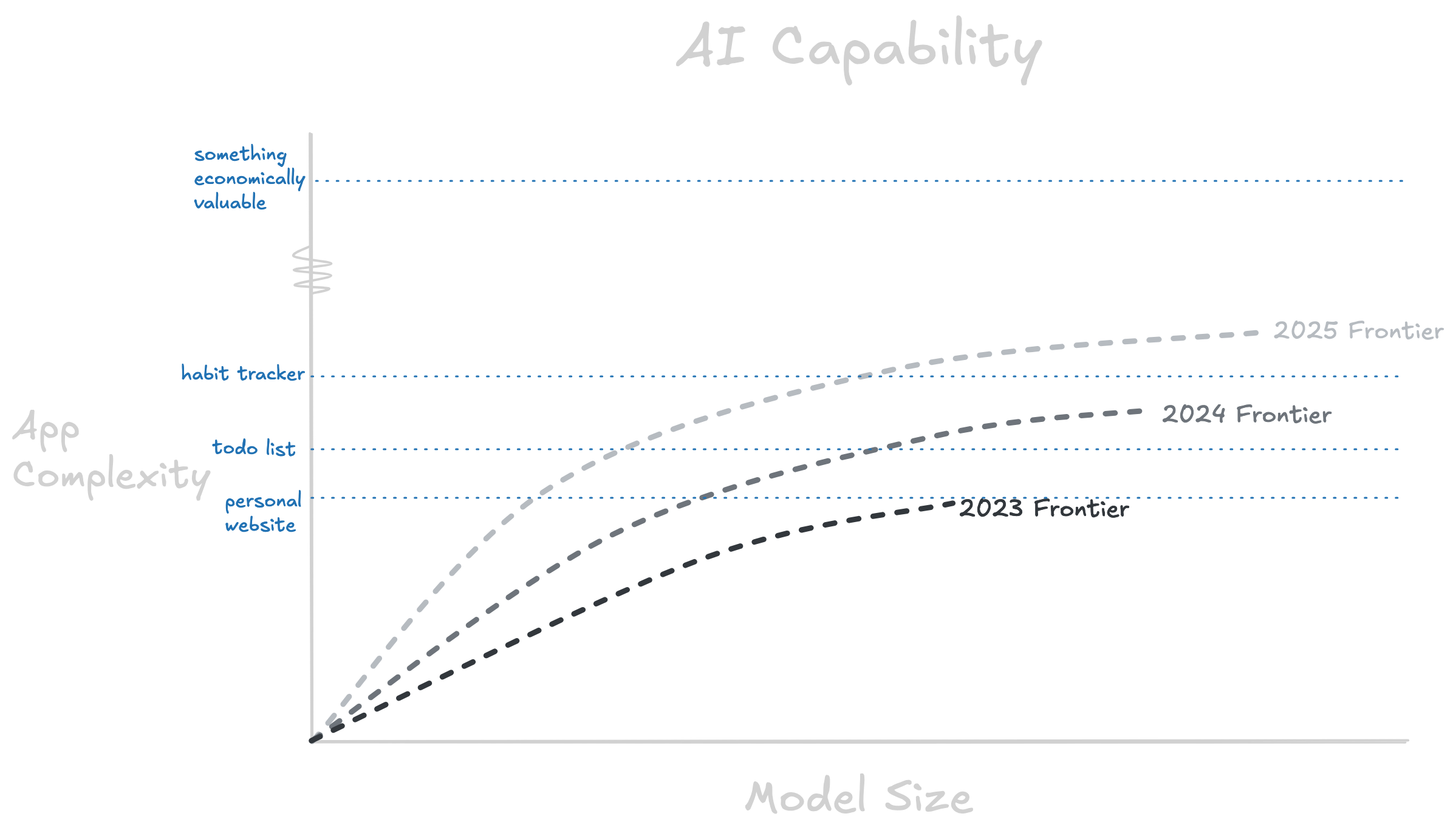

Fast forward to today, I’ve learned a ton about software engineering, all while being 31 months into “6 months from AI taking my job”, and I realize my previous mental model was not the correct way of thinking. It’s now shifted to something more like this (forgive the Apple-style level-of-detail):

To be clear, I’m talking about any non-technical English speaker’s ability to produce software using AI, not a Software Engineer using Cursor. Even with the theoretical best possible harness, frontier AI models still reach this asymptotic limit of complexity they can handle, no matter how much time or money is spent on it. We can consider things like simple todo list apps “solved” as their complexity is well below the asymptote of the frontier. But we are still far from AI agents being capable of making real production apps on their own.

Even if a new model raises this limit, you still by nature need a human software engineer in the loop to build anything of real economic value. If the value of apps under the curve eventually comes down to token cost, the only way to create excess economic value under the curve would be with human differentiation. This means the smarter models get, the more important it is to have human differentiation in your app.

But we’re still a ways out from worrying about that. Today, I think anyone who’s tried vibe coding knows what I’m talking about: You have a cool idea and go from 0 to MVP with AI, and it’s honestly really impressive. So you get a bit more ambitious, you keep adding more features, they seem to work, all is well. But suddenly the model hits a wall. It can’t one-shot the next feature. It spins its wheels claiming whatever bug is fixed even though it’s not. A feature that was working fine mysteriously breaks even though you told it to “make no mistakes”. At this point, you look at the code for the first time and realize it’s complete spaghetti, and it’s probably easier just to restart.

Why does this happen? I think there are a few main reasons that won’t change anytime soon:

Context length is a hard limit

This is self-evident, but as soon as your app is larger than what can fit into a single request, it’s inherently impossible for an LLM to properly consider the whole system when writing new features. So even an incredible coding model will eventually start introducing tech debt if left alone. Of course the harness can help (Cursor’s codebase indexing is pretty incredible), but it doesn’t totally solve it.
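To put rough numbers on that, here is a back-of-the-envelope sketch in Python. Everything in it is an assumption for illustration: the function names are made up, the 4-characters-per-token ratio is only a common rule of thumb that varies by tokenizer and language, and the codebase size and context window are hypothetical values, not measurements of any real model or app.

```python
import math


def estimated_tokens(total_chars: int, chars_per_token: float = 4.0) -> int:
    """Rough token count for a codebase.

    chars_per_token is an assumed rule of thumb, not a property of any
    specific tokenizer.
    """
    return math.ceil(total_chars / chars_per_token)


def fraction_visible(
    total_chars: int,
    context_window_tokens: int,
    chars_per_token: float = 4.0,
) -> float:
    """Upper bound on the share of the codebase that fits in one request."""
    tokens = estimated_tokens(total_chars, chars_per_token)
    if tokens == 0:
        return 1.0  # an empty codebase trivially fits
    return min(1.0, context_window_tokens / tokens)


if __name__ == "__main__":
    # Hypothetical inputs: 80 MB of source text and a 200k-token window.
    share = fraction_visible(total_chars=80_000_000, context_window_tokens=200_000)
    print(f"At most {share:.1%} of the code fits in a single request")
```

Even this upper bound is generous, since the request also has to hold the instructions, the conversation so far, and the model’s own output.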

The longer an LLM runs, the higher the chance of “slop”

Even with all the latest innovations in reasoning and reinforcement learning, LLMs still just probabilistically predict the next token. With each next token, there is a high probability that it’s the “right” one, but some small chance that it’s the wrong one. As the model traverses this branching tree, it might start down the wrong path and have a compounding probability of future errors. Here’s a really great visualization of that variability. Extrapolate that to a whole production codebase.
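The compounding effect is easy to see with a toy calculation. This is a deliberately simplified sketch: it assumes every step is independent and has the same chance of being right, which real models don’t satisfy, and the 99.9% accuracy figure and the function name are invented for illustration.

```python
def chance_of_clean_run(per_step_accuracy: float, steps: int) -> float:
    """Probability that every one of `steps` independent steps is correct.

    Assumes a fixed, independent per-step accuracy, which is a
    simplification of how an LLM actually behaves.
    """
    if not 0.0 <= per_step_accuracy <= 1.0:
        raise ValueError("per_step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_accuracy ** steps


if __name__ == "__main__":
    # Hypothetical per-step accuracy of 99.9%.
    for steps in (100, 1_000, 10_000):
        p = chance_of_clean_run(0.999, steps)
        print(f"{steps:>6} steps: {p:.4%} chance of no mistakes")
```

Under these assumptions, a model that is right 99.9% of the time per step has only about a 37% chance of getting through 1,000 steps without a single mistake, and the odds keep shrinking as the run gets longer.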

How do you combat this? You need a human in the loop, someone who knows what “correct” is. Especially if you want software that doesn’t just “work” but is well engineered.

Even with the best harness, LLMs just aren’t designed for long-term system thinking and planning.

I think we under-appreciate a good software engineer’s subconscious long-term memory of a codebase. When I’m building a new feature in a 2 million LOC app, I’m doing so with the memory of all the other code and patterns I’ve seen. I also remember the feeling of pain dealing with previously hacked solutions. Right now, LLMs just don’t have this same ability, and I suspect it’s difficult to reinforcement-learn it the way you can math/code correctness. They need a software engineer actively steering them and reviewing their code. Until an AI lab comes up with a different type of architecture, I don’t think today’s LLMs are ever going to get away from this problem.

Ultimately, I think using AI is a technical skill just like learning your IDE shortcuts. For a good software engineer it can be a huge productivity multiplier, but we still need to own our output and be the ones in control. Software engineering is about so much more than just writing code. It’s about designing systems, evaluating tradeoffs, and most importantly deciding what is worth building. So even with the best coding LLM in the world, it will always be way more valuable in the hands of a software engineer than in the hands of anyone else.